Kubernetes backup with Velero + MinIO

Here I describe a simple solution for backing up Kubernetes resources.

The goal is to have an easy way to back up Kubernetes resources to external storage outside of Kubernetes. To do this, I used my storage server and installed an S3-compatible storage service, MinIO.

Velero is installed within the cluster as a backup service, along

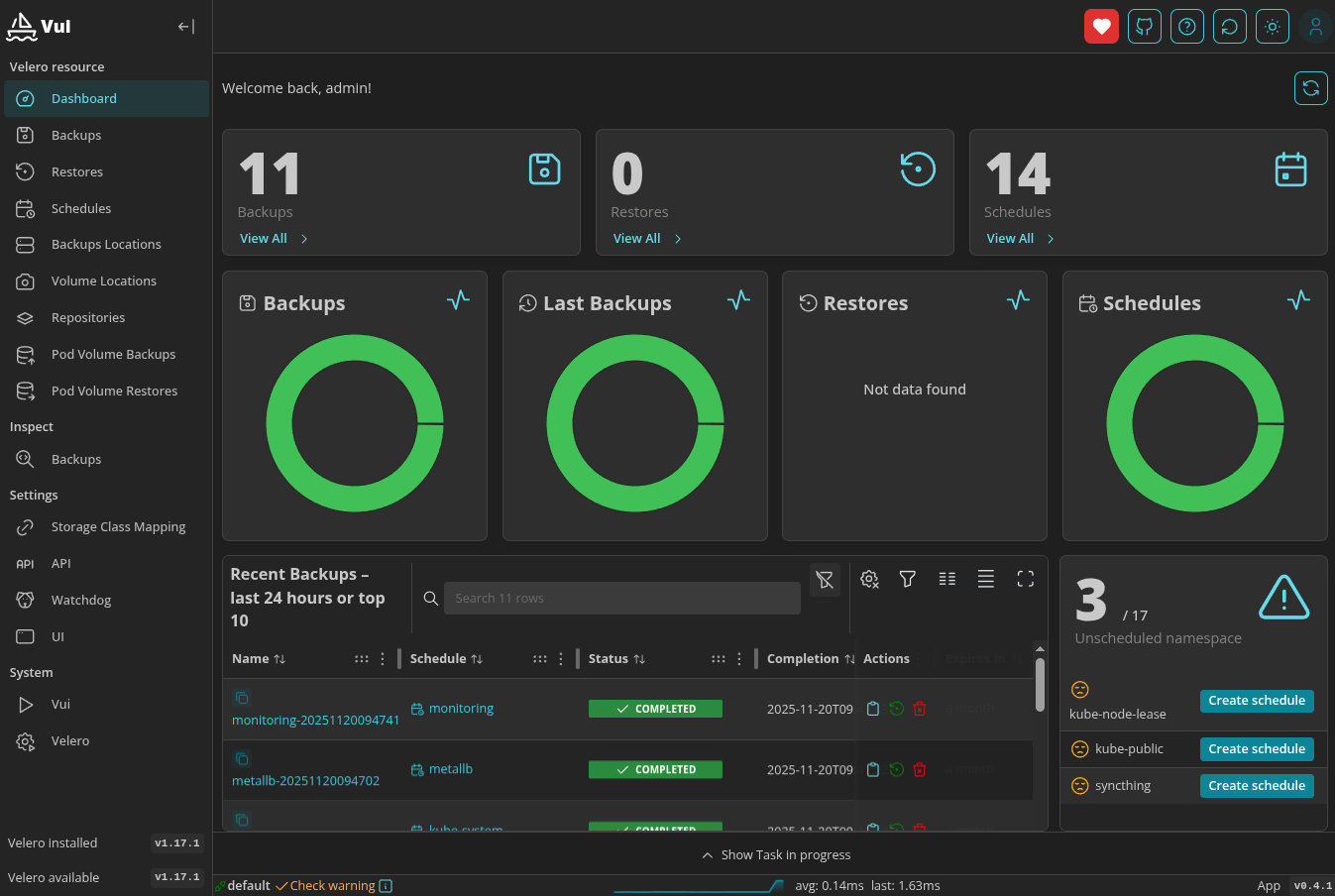

with a convenient UI called velero-UI, or vui for short.

This is intended to be a basis or a starting point for operating Velero.

Here’s what you need in detail:

- Kubernetes Cluster (On-Prem)

- Storage Server for MinIO (IP 192.168.123.123 in this guide)

- Velero CLI v1.17.1

- Velero AWS Plugin v1.13.1

But let’s get started.

1. MinIO installation on storage system (Debian)

# Create system user and folders
# (/tank/minio is the data directory referenced by MINIO_VOLUMES in the environment file)
sudo useradd -r minio-user -s /sbin/nologin
sudo mkdir -p /usr/local/share/minio /etc/minio /tank/minio
sudo chown -R minio-user:minio-user /usr/local/share/minio /etc/minio /tank/minio

# Fetch MinIO binary
wget https://dl.min.io/server/minio/release/linux-amd64/minio
chmod +x minio
sudo mv minio /usr/local/bin/

# Create MinIO environment file
cat <<EOF | sudo tee /etc/minio/minio.env
MINIO_ROOT_USER=minioadmin
MINIO_ROOT_PASSWORD=<strong_password>
MINIO_VOLUMES="/tank/minio"
MINIO_OPTS="--address 192.168.123.123:9000 --console-address 192.168.123.123:9001"
EOF
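The <strong_password> placeholder needs a real value before MinIO is started. As a minimal sketch, assuming head, base64, tr and cut are available on the storage server, a random alphanumeric password could be generated like this. The helper name gen_password is hypothetical and not part of MinIO.

```shell
# Sketch: generate a random alphanumeric password of a given length.
# gen_password is a hypothetical helper, not a MinIO command.
gen_password() {
    length="${1:-32}"
    # Read twice as many random bytes as needed, base64-encode them,
    # drop everything except letters and digits, then keep $length characters.
    head -c "$((length * 2))" /dev/urandom \
        | base64 \
        | tr -dc 'A-Za-z0-9' \
        | cut -c "1-$length"
}

# Example: print a 32-character password for MINIO_ROOT_PASSWORD
# gen_password 32
```

The same value is needed again for the mc alias and for the Velero credentials file, so it should be stored somewhere safe.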

# Create a systemd service
cat <<EOF | sudo tee /etc/systemd/system/minio.service
[Unit]
Description=MinIO
Wants=network-online.target
After=network-online.target

[Service]
User=minio-user
Group=minio-user
EnvironmentFile=/etc/minio/minio.env
ExecStart=/usr/local/bin/minio server \$MINIO_OPTS \$MINIO_VOLUMES
Restart=always
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

sudo systemctl daemon-reload
sudo systemctl enable --now minio
sudo systemctl status minio

2. Create MinIO bucket
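Before creating the bucket, it can help to confirm that MinIO actually answers on the network and not only that the systemd unit is active. The following is a minimal sketch that probes MinIO's liveness endpoint (/minio/health/live) with curl. The helper names minio_health_url and check_minio are hypothetical, and curl is assumed to be installed.

```shell
# Sketch: probe MinIO's liveness endpoint.
# minio_health_url and check_minio are hypothetical helper names.
minio_health_url() {
    # $1 = host, $2 = port (defaults to the S3 API port used in this guide)
    printf 'http://%s:%s/minio/health/live\n' "$1" "${2:-9000}"
}

check_minio() {
    # -f makes curl exit non-zero on HTTP errors, -sS hides progress but keeps errors.
    curl -fsS --max-time 5 -o /dev/null "$(minio_health_url "$@")"
}

# Example:
# check_minio 192.168.123.123 9000 && echo "MinIO is up"
```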

# Install mc
wget https://dl.min.io/client/mc/release/linux-amd64/mc
chmod +x mc
sudo mv mc /usr/local/bin/

# Set an alias (quote the password so the shell does not interpret special characters)
mc alias set localminio http://192.168.123.123:9000 minioadmin '<strong_password>'

# Create the bucket
mc mb localminio/velero-backups

# Verify that the bucket exists
mc ls localminio

3. Prepare Velero secret

cat <<EOF > ./credentials-velero
[default]
aws_access_key_id=minioadmin
aws_secret_access_key=<strong_password>
EOF

4. Install Velero

This installs Velero into the cluster. Make sure you can connect to the Kubernetes API server first.
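A quick preflight check can catch a missing CLI before the install starts. This is only a sketch: require_cmds is a hypothetical helper, and kubectl is assumed to be the tool used to reach the cluster.

```shell
# Sketch: verify that required CLIs are on PATH before installing.
# require_cmds is a hypothetical helper.
require_cmds() {
    missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd" >&2
            missing=1
        fi
    done
    # Returns 0 only if every command was found.
    return "$missing"
}

# Example: check the tools, then confirm the API server is reachable
# require_cmds kubectl velero && kubectl cluster-info
```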

velero install \
  --provider aws \
  --plugins velero/velero-plugin-for-aws:v1.13.1 \
  --bucket velero-backups \
  --secret-file ./credentials-velero \
  --backup-location-config region=minio,s3ForcePathStyle=true,s3Url=http://192.168.123.123:9000

5. Check backup storage location

velero backup-location get
velero backup-location validate default

6. Test-Backup
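Before taking the test backup, it is worth waiting until the backup storage location reports the phase Available, since Velero needs a moment after installation to validate the bucket. The sketch below polls a command until it prints an expected value. wait_for_output is a hypothetical helper, and the example assumes Velero runs in the velero namespace with a location named default.

```shell
# Sketch: poll a command until it prints an expected value.
# wait_for_output is a hypothetical helper.
wait_for_output() {
    expected="$1"; attempts="$2"; delay="$3"
    shift 3
    i=1
    while [ "$i" -le "$attempts" ]; do
        # Run the remaining arguments as the probe command and compare its output.
        if [ "$("$@" 2>/dev/null)" = "$expected" ]; then
            return 0
        fi
        # Pause between attempts, but not after the last one.
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}

# Example: up to 12 attempts, 5 seconds apart
# wait_for_output Available 12 5 \
#   kubectl -n velero get backupstoragelocation default -o jsonpath='{.status.phase}'
```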

velero backup create test-backup --include-namespaces default
velero backup get
velero restore create --from-backup test-backup

7. Summary

At this point you have a working Velero + MinIO setup and can back up and restore with the CLI.
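For regular use, backups need unique names. As a small sketch, a timestamped name can be built with date. backup_name is a hypothetical helper, and the schedule name, cron expression and TTL in the comments are example values only.

```shell
# Sketch: build a unique, timestamped backup name.
# backup_name is a hypothetical helper.
backup_name() {
    # $1 = prefix, for example the namespace being backed up
    printf '%s-%s\n' "$1" "$(date +%Y%m%d-%H%M%S)"
}

# Example: one-off backup with a timestamped name
# velero backup create "$(backup_name default)" --include-namespaces default
#
# Velero can also create backups on a cron schedule (example values):
# velero schedule create daily-default --schedule "0 2 * * *" \
#   --include-namespaces default --ttl 168h
```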

Setup Velero GUI

After this installation, the Velero UI can be accessed at velero.hq.c1.itknecht.de (change this to match your infrastructure). The default credentials are admin/admin.

Add Helm Repo

helm repo add seriohub https://seriohub.github.io/velero-helm/
helm repo update

Install the VUI Chart (Single-Cluster)

helm install vui seriohub/vui --namespace vui --create-namespace -f values.yaml

values.yaml

# Global
global:
  veleroNamespace: velero
  core: false
  agentMode: false
  clusterName: default
  k8SInclusterMode: true

# API Service configuration
apiService:
  deployment:
    image:
      registry: docker.io
      repository: dserio83/velero-api
      tag: 0.3.1
    imagePullPolicy: IfNotPresent
    replicas: 1
  config:
    apiTokenExpirationMin: 60
    apiTokenRefreshExpirationDays: 7
    apiEnableDocumentation: false
    apiEndpointPort: 8001
    apiEndpointUrl: 0.0.0.0
    apiRateLimiterCustom1: Security:xxx:60:100
    apiRateLimiterL1: "60:100"
    debugLevel: info
    veleroInspectFolder: /tmp/velero-inspect-backups
    origins1: '*'
    securityDisableUsersPwdRate: 1
    securityPathDatabase: ./data
    inspectBackupEnabled: false
  secret:
    clientKey:
    existingSecret: ""
    defaultAdminUsername: admin
    defaultAdminPassword: admin
    resticPassword: static-passw0rd
    securityTokenKey:
    natsUsername: ""
    natsPassword: ""

  nats:
    enabled: false

# UI Service configuration
uiService:
  deployment:
    image:
      registry: docker.io
      repository: dserio83/velero-ui
      tag: 0.3.1
    imagePullPolicy: IfNotPresent
    replicas: 1
  config:
    nextPublicRefreshDatatableAfter: 1500
    nextPublicRefreshRecent: 5000
    nextPublicLoggerEnabled: false
    cacheTTL: 180

# Watchdog Service configuration
watchdogService:
  deployment:
    image:
      registry: docker.io
      repository: dserio83/velero-watchdog
      tag: 0.1.8
    imagePullPolicy: IfNotPresent
    replicas: 1
    ports:
      - name: watchdog-api
        port: 8001
        protocol: TCP
        targetPort: 8001
  config:
    apiEndpointUrl: 0.0.0.0
    apiEndpointPort: 8001
    debug: false
    debugLevel: info
    backupEnable: true
    scheduleEnable: true
    expiresDaysWarning: 29
    processCycleSec: 300
    notificationSkipCompleted: true
    notificationSkipInProgress: true
    notificationSkipRemoved: true
    notificationSkipDeleting: true
    sendStartMessage: true
    sendReportAtStartup: false
    reportBackupItemPrefix: ""
    reportScheduleItemPrefix: ""
    apprise: ""
    emailAccount: <email>
    emailEnable: false
    emailPassword: <pwd>
    emailRecipients: <recipients>
    emailSmtpPort: <smtp-port>
    emailSmtpServer: <smtp-server>
    telegramChatId: <chat-id>
    telegramEnable: false
    telegramToken: <token>
    slackChannel: <channel-id>
    slackEnable: false
    slackToken: <token>
  # secret
  secret:
    existingSecret: ""

# CronJobs configuration
cronJobs:
  report:
    failedJobsHistoryLimit: 0
    schedule: "0 8 * * *"
    successfulJobsHistoryLimit: 0
    jobSpec:
      image:
        registry: docker.io
        repository: dserio83/velero-watchdog
        tag: 0.1.8
      imagePullPolicy: IfNotPresent

# Core Service configuration
coreService:
  deployment:
    image:
      registry: docker.io
      repository: dserio83/vui-core
      tag: 0.1.2
    imagePullPolicy: IfNotPresent
    imagePullSecrets: []
    podSecurityContext:
      enabled: false
      seccompProfile:
        type: RuntimeDefault
    securityContext:
      enabled: false
      allowPrivilegeEscalation: false
      capabilities:
        drop:
          - ALL
      readOnlyRootFilesystem: true
      runAsNonRoot: true
      runAsUser: 65534
  config:
    # -- Token expiration in minutes after creation
    apiTokenExpirationMin: 60
    # -- Token refresh expiration in days
    apiTokenRefreshExpirationDays: 7
    # -- Port for the API socket binding
    apiEndpointPort: 8001
    # -- Host address for the API socket binding
    apiEndpointUrl: 0.0.0.0
    # -- Custom rate limiter rule 1 (format: label:type:seconds:max_requests)
    apiRateLimiterCustom1: Security:xxx:60:100
    # -- Rate limiter level 1 (format: seconds:max_requests)
    apiRateLimiterL1: "60:100"
    # -- Debug log level (debug, info, warning, error, critical)
    debugLevel: info
    # -- Allowed origins for CORS
    origins1: '*'
    # -- If true, weak passwords are accepted
    securityDisableUsersPwdRate: 1
    # -- Path to SQLite database file used to store data
    securityPathDatabase: ./data
  # Secrets used by the Core service
  secret:
    # -- Optional: Name of existing Kubernetes Secret
    existingSecret: ""
    # -- Default admin username (used for first login)
    defaultAdminUsername: admin
    # -- Default admin password (used for first login)
    defaultAdminPassword: admin
    # -- Username for connecting to the NATS server
    natsUsername: ""
    # -- Password for connecting to the NATS server
    natsPassword: ""

# bindPassword

auth:
  enabled: true
  mode: BUILT-IN

exposure:
  mode: ingress
  ingress:
    ingressClassName: nginx
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx
        cert-manager.io/issuer: step-issuer
        cert-manager.io/issuer-kind: StepClusterIssuer
        cert-manager.io/issuer-group: certmanager.step.sm
    spec:
      tls:
        - hosts:
            - velero.hq.c1.itknecht.de
          secretName: velero-ui-tls

Figure 1: Velero VUI